Unified Traceability Matrix Framework for Pod-Based QA Teams

A practical, scalable approach to connecting requirements, test coverage, defects, releases, and usability across multiple product pods—without killing pod autonomy.

Executive Summary

As organizations adopt pod- or squad-based development models, QA often fragments: each pod runs its own process, tools, and naming conventions. This creates blind spots:

Leaders can’t see end-to-end coverage across apps.

Pods struggle to align on cross-cutting journeys (checkout, auth, reporting).

Defects and usability issues are hard to trace back to business goals.

This article introduces a Unified Traceability Matrix Framework that:

Preserves pod autonomy (each pod owns its own matrix and tools).

Enforces organization-wide standards (naming, coverage metrics, dashboards).

Adds usability traceability alongside functional coverage.

You’ll get a concrete structure, example naming conventions, RACI-style ownership, coverage metrics, usability extensions, and a phased rollout plan.

Part 1: Foundation — Unified Traceability Structure

This section defines the backbone of the system: how requirements, test cases, defects, and releases relate to each other and who owns what.

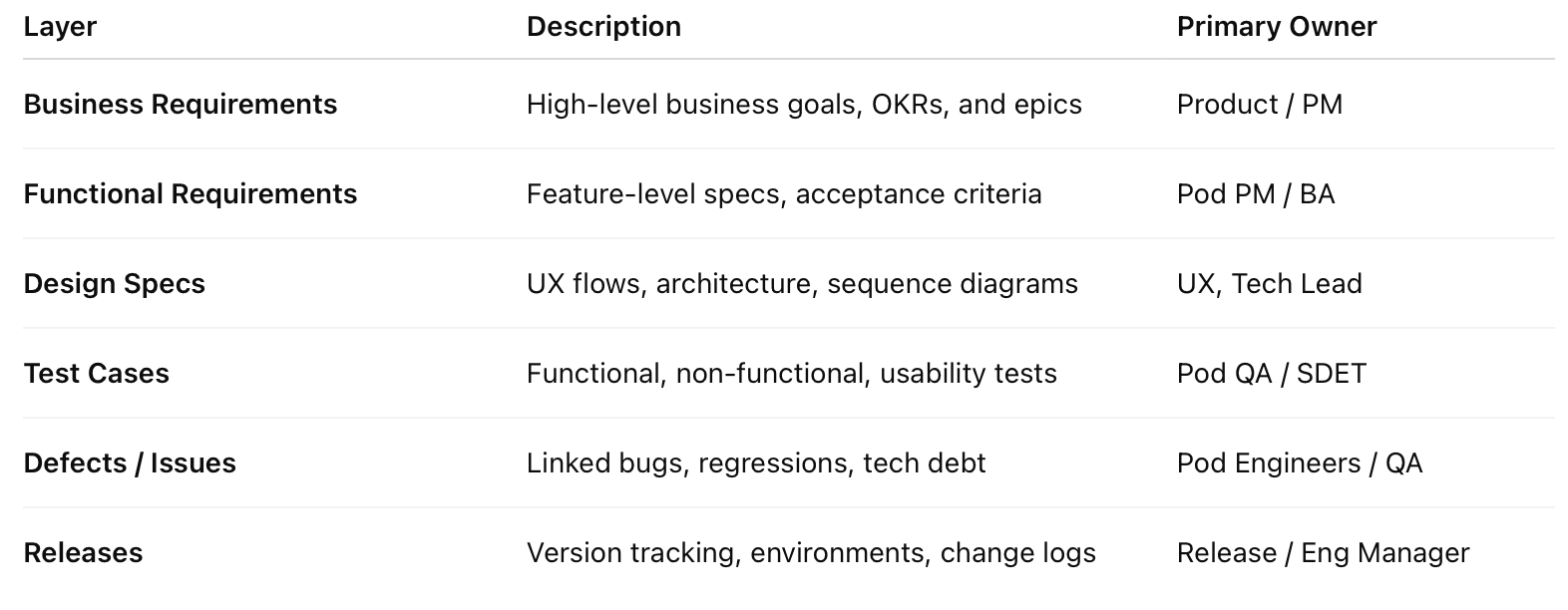

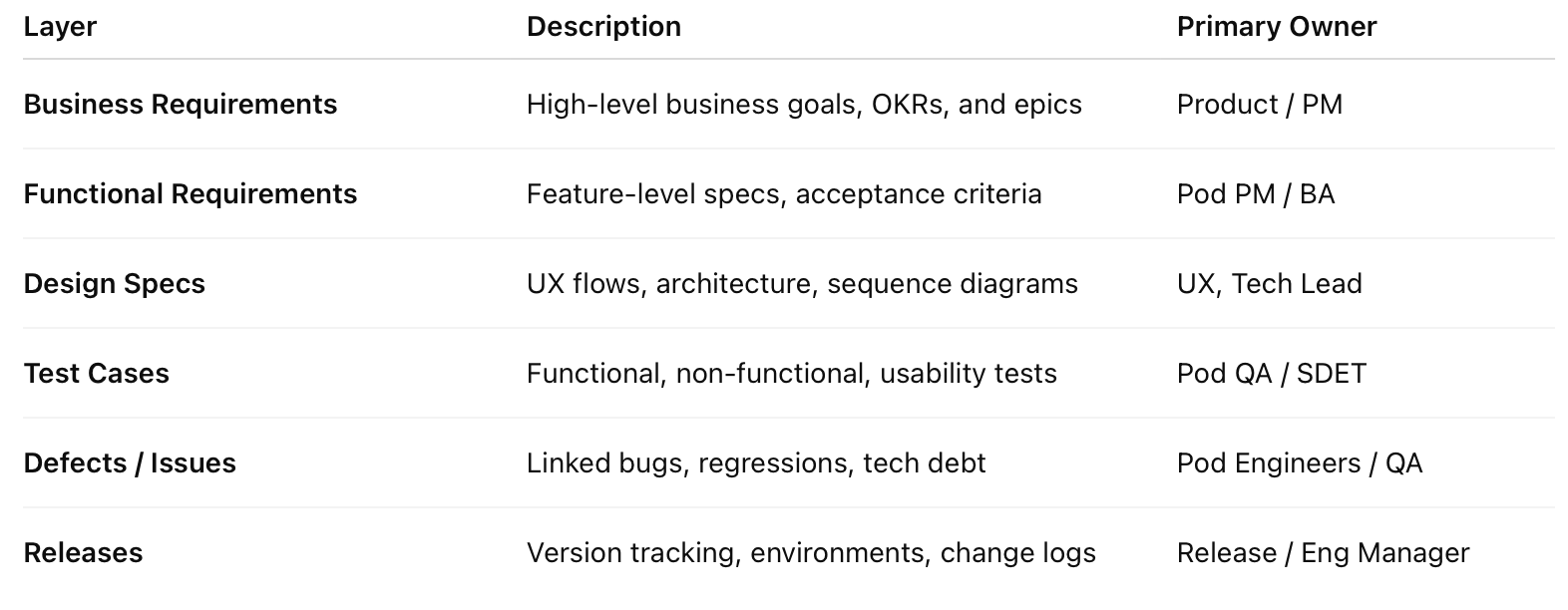

1.1 Traceability Matrix Architecture

At a minimum, your traceability model should cover:

Business intent (why we’re building something)

Functional & technical requirements (what we’re building)

Tests & defects (how we validate and learn)

Releases (when value ships and where it lands)

Core Traceability Layers

1.2 Bidirectional Traceability Model

At scale, you need to answer questions both forward and backward:

Forward: “For this requirement, what’s the test coverage and defect history?”

Backward: “For this defect in production, which business goal is at risk?”

Forward Traceability:

Requirements → Test Cases → Execution Results → Release Readiness

Backward Traceability:

Defects → Failing Test Cases → Impacted Requirements → Business Goals / OKRs

Example Impact Analysis Graph (Textual)

Goal: Increase Checkout Conversion by 5% (BR-REV-001)
└── Req: PAYMENTS-REQ-CHECKOUT-001
    ├── TC: PAYMENTS-TC-CHECKOUT-042 (Failing)
    │   └── Defect: PAYMENTS-DEF-HIGH-007
    └── Release: v3.12.0 (Blocked)

This format lets leaders quickly see how a defect affects revenue-focused goals.
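Both directions can be answered from the same set of links. The following is a minimal sketch, assuming the links are stored as simple (parent, child) pairs; the `LINKS` list and the `trace` helper are illustrative names, not part of any particular tool, and the release node is left out for brevity.

```python
from collections import defaultdict

# Hypothetical link data mirroring the impact analysis example:
# goal -> requirement -> test case -> defect.
LINKS = [
    ("BR-REV-001", "PAYMENTS-REQ-CHECKOUT-001"),
    ("PAYMENTS-REQ-CHECKOUT-001", "PAYMENTS-TC-CHECKOUT-042"),
    ("PAYMENTS-TC-CHECKOUT-042", "PAYMENTS-DEF-HIGH-007"),
]

children = defaultdict(list)  # forward edges (parent -> children)
parents = defaultdict(list)   # backward edges (child -> parents)
for parent, child in LINKS:
    children[parent].append(child)
    parents[child].append(parent)


def trace(start, edges):
    """Return every artifact reachable from `start`, in breadth-first order."""
    seen, queue = [], [start]
    while queue:
        node = queue.pop(0)
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.append(nxt)
                queue.append(nxt)
    return seen


# Forward: what validates this requirement, and what has gone wrong?
forward = trace("PAYMENTS-REQ-CHECKOUT-001", children)
# Backward: which requirement and business goal does this defect put at risk?
backward = trace("PAYMENTS-DEF-HIGH-007", parents)
print(forward)
print(backward)
```

Keeping one edge list and deriving both lookup tables from it means a link only has to be recorded once to be traceable in either direction.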

1.3 Standardized Naming Convention (Cross-Pod)

Consistent naming is what makes cross-pod dashboards and reports actually usable.

Naming Schema
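A convention is easiest to enforce when it can be checked by a script. The sketch below assumes a pattern inferred from IDs used in this article, such as PAYMENTS-TC-CHECKOUT-042 and PAYMENTS-DEF-HIGH-007: pod, artifact type, qualifier, then a three-digit sequence. The list of type codes and the three-digit rule are assumptions for illustration; adjust them to whatever schema your council agrees on.

```python
import re

# Assumed pattern: <POD>-<TYPE>-<QUALIFIER>-<3-digit sequence>
# The TYPE codes (REQ, TC, DEF, UXTC) are an assumption, not an official schema.
ARTIFACT_ID = re.compile(
    r"^(?P<pod>[A-Z]+)-(?P<type>REQ|TC|DEF|UXTC)-(?P<qualifier>[A-Z0-9]+)-(?P<seq>\d{3})$"
)


def parse_artifact_id(artifact_id):
    """Return the ID's parts as a dict, or None if it breaks the convention."""
    match = ARTIFACT_ID.match(artifact_id)
    return match.groupdict() if match else None


print(parse_artifact_id("PAYMENTS-TC-CHECKOUT-042"))
print(parse_artifact_id("payments_tc_42"))
```

A check like this could run when exports land in the shared repository, so malformed IDs are rejected before they reach a dashboard. Business goal IDs such as BR-REV-001 follow a different shape and would need their own pattern.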

Part 2: Unifying Traceability Across All Pods

This section describes how to coordinate across pods without centralizing everything or slowing teams down.

2.1 QA Governance Model

You need a lightweight governance layer with real decision-making authority to keep standards consistent.

┌────────────────────────────────────┐

│ QA GOVERNANCE COUNCIL

│ (1 QA Lead per Pod + QA Director + Release Manager + Rep from UX)

├────────────────────────────────────┤

│ Responsibilities:

│ • Define and evolve traceability standards

│ • Review cross-pod coverage gaps and critical journeys

│ • Manage shared test assets (APIs, login flows, shared services)

│ • Approve metrics, targets, and dashboards

│ • Monthly “Coverage & Risk” health reviews

└────────────────────────────────────┘

▼

┌────────────────────────────┐

│ Pod A │ Pod B │ Pod C │ Pod D │

│ (Payments) │ (Auth) │ (Dashboard)│ (Reports) │

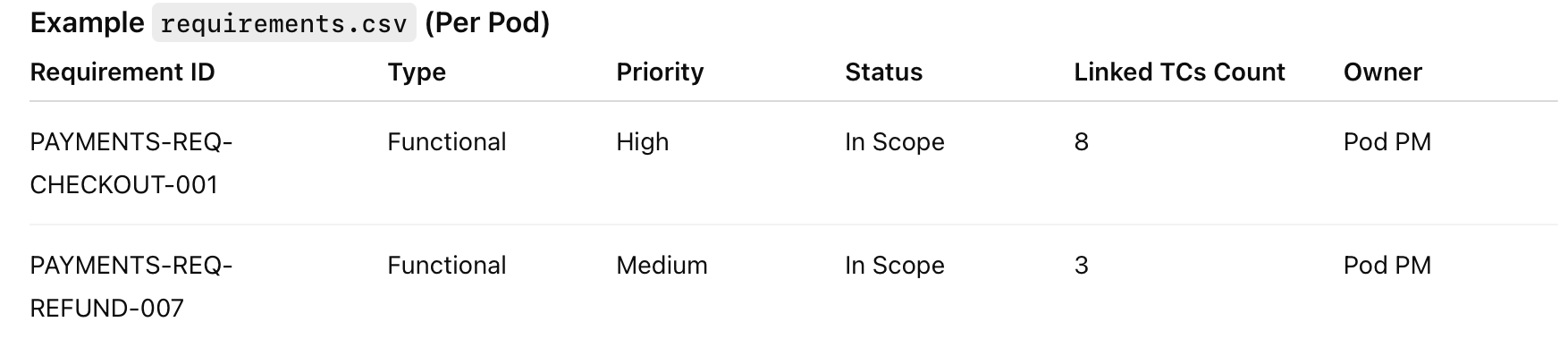

└────────────────────────────┘2.2 Shared Traceability Repository Structure

Even if pods use different tools (TestRail vs. Zephyr), you can standardize exports into a shared repository.

/traceability-matrix
├── /global
│   ├── cross-pod-requirements.md      # Shared/dependent features (auth, billing)
│   ├── integration-test-mapping.md    # E2E flows across pods
│   └── coverage-dashboard-config.yml  # Pod IDs, thresholds, labels
├── /pods
│   ├── /pod-payments
│   │   ├── requirements.csv
│   │   ├── test-cases.csv
│   │   └── defect-linkage.csv
│   ├── /pod-auth
│   ├── /pod-dashboard
│   └── /pod-reports
└── /reports
    ├── weekly-coverage-summary.md
    └── release-traceability-report.md

Example requirements.csv (Per Pod)

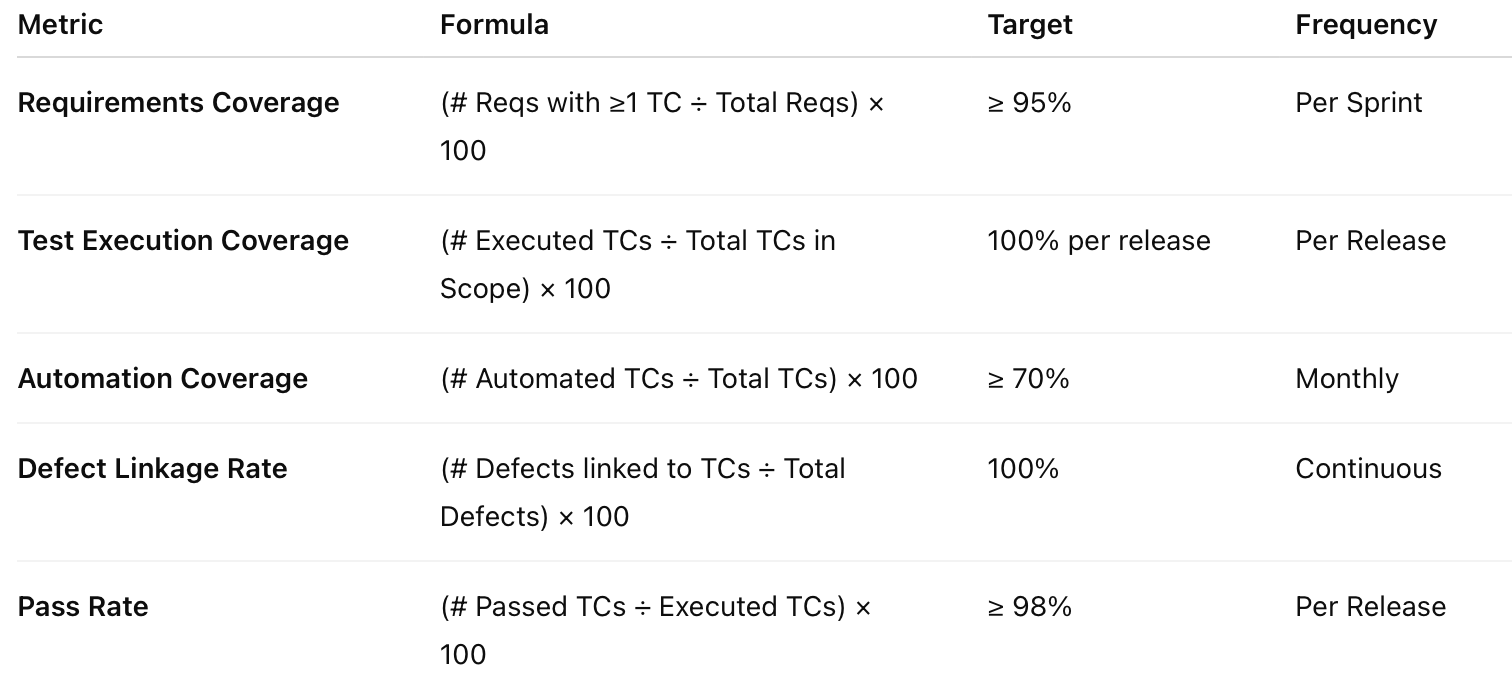
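Once every pod exports into the shared layout, a short script can gather the per-pod files into one dataset. This is a rough sketch that assumes each pod folder under /pods holds CSV files with a header row; `load_pod_rows` is a hypothetical helper, and it makes no assumption about column names beyond adding a `pod` field.

```python
import csv
from pathlib import Path


def load_pod_rows(repo_root, filename):
    """Yield rows from every pods/<pod>/<filename>, tagged with the pod folder name."""
    for path in sorted(Path(repo_root).glob(f"pods/*/{filename}")):
        with path.open(newline="", encoding="utf-8") as handle:
            for row in csv.DictReader(handle):
                row["pod"] = path.parent.name  # e.g. "pod-payments"
                yield row
```

Calling `list(load_pod_rows("traceability-matrix", "requirements.csv"))` would return one combined list of requirement rows, ready to feed a report or dashboard export. Pods without the file are simply skipped, which suits a gradual rollout.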

Part 3: Tracking Test Coverage Across All Apps

The goal is to move from “we think we’re covered” to quantified, per-pod and org-wide coverage.

3.1 Coverage Metrics Framework

These metrics should be standardized across all pods.
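As one illustration of a standardized metric, requirement coverage can be defined as the share of requirements with at least one linked test case. The sketch below uses that common definition as an assumption; the function name, the sample data, and the second requirement ID are hypothetical.

```python
def requirement_coverage(requirement_ids, tc_to_requirement):
    """Percent of requirements that have at least one linked test case."""
    unique_requirements = set(requirement_ids)
    if not unique_requirements:
        return 0.0
    covered = set(tc_to_requirement.values()) & unique_requirements
    return round(100 * len(covered) / len(unique_requirements), 1)


# Hypothetical data for one pod: two requirements, one linked test case.
requirements = ["PAYMENTS-REQ-CHECKOUT-001", "PAYMENTS-REQ-CHECKOUT-002"]
links = {"PAYMENTS-TC-CHECKOUT-042": "PAYMENTS-REQ-CHECKOUT-001"}

print(requirement_coverage(requirements, links))  # 50.0
```

Because every pod computes the number the same way, per-pod figures can be compared directly and rolled up into an org-wide view.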

3.2 Unified Coverage Dashboard Design

Your central dashboard should show:

Org-level coverage & risk

Per-pod health

Coverage gaps and trends

You can implement this using Looker, Power BI, Grafana, or similar, fed by nightly exports from Jira / TestRail.

Key automation hooks:

Creating a requirement triggers a placeholder row in the matrix.

Test case creation requires assigning a Requirement ID tag.

CI pipelines push results with TC IDs → dashboard aggregates by requirement.

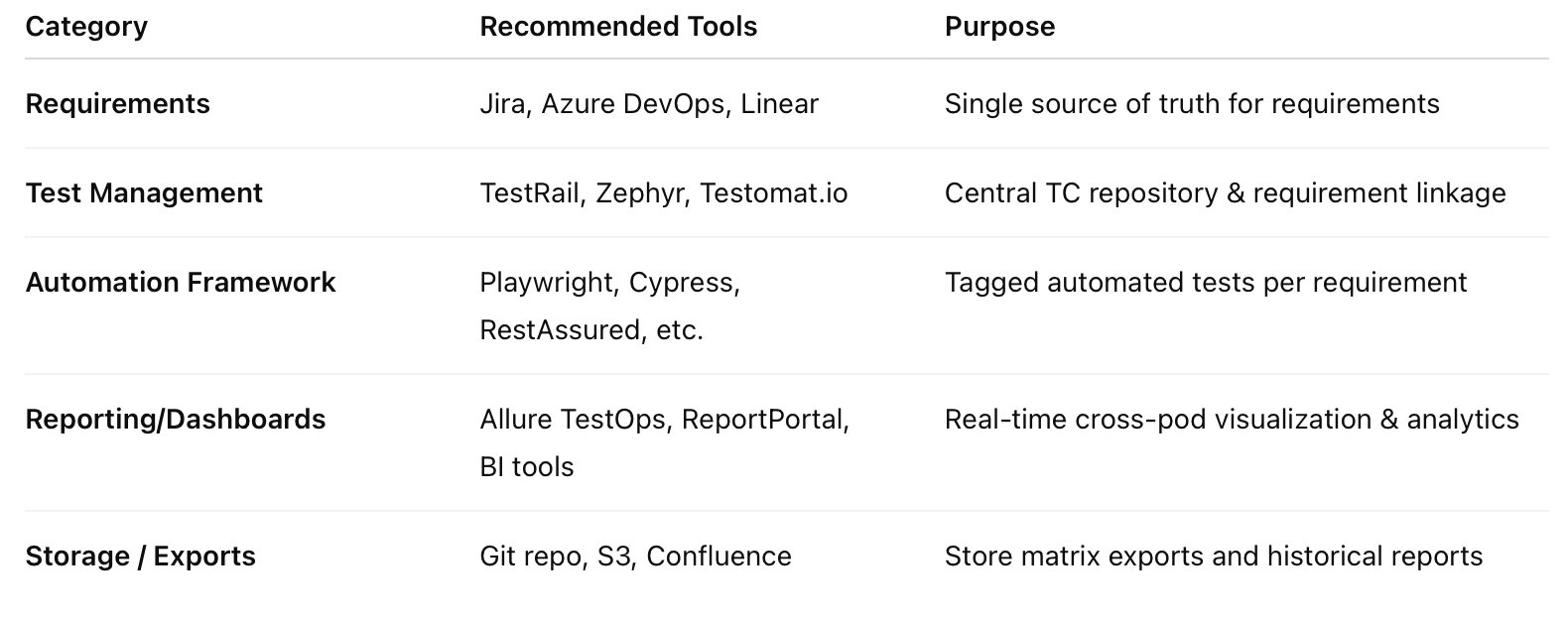
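The last two hooks can be sketched together: results arrive tagged with TC IDs and requirement IDs, and the aggregation step both rolls them up per requirement and flags test cases that are missing a tag. The payload shape and field names (`tc_id`, `requirement_id`, `status`) are assumptions for illustration, not the format of any specific CI system or test management tool.

```python
from collections import defaultdict

# Hypothetical CI payload: one record per executed test case.
ci_results = [
    {"tc_id": "PAYMENTS-TC-CHECKOUT-042", "requirement_id": "PAYMENTS-REQ-CHECKOUT-001", "status": "failed"},
    {"tc_id": "PAYMENTS-TC-CHECKOUT-043", "requirement_id": "PAYMENTS-REQ-CHECKOUT-001", "status": "passed"},
    {"tc_id": "PAYMENTS-TC-CHECKOUT-044", "requirement_id": None, "status": "passed"},
]


def aggregate_by_requirement(results):
    """Count statuses per requirement; also return test case IDs lacking a requirement tag."""
    summary = defaultdict(dict)
    untagged = []
    for result in results:
        requirement_id = result.get("requirement_id")
        if not requirement_id:
            untagged.append(result["tc_id"])  # violates the tagging rule
            continue
        counts = summary[requirement_id]
        counts[result["status"]] = counts.get(result["status"], 0) + 1
    return dict(summary), untagged


summary, untagged = aggregate_by_requirement(ci_results)
print(summary)
print(untagged)
```

Surfacing untagged test cases separately, rather than silently dropping them, gives the governance council a concrete list to chase in its monthly review.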

3.3 Tooling Recommendations

Use tools you already have—just enforce consistent linking and exports.

Part 4: Tracking Usability (Not Just “Does It Work?”)

Most traceability matrices stop at “functional correctness.” This framework extends traceability to usability and user experience, which often drive real business outcomes.

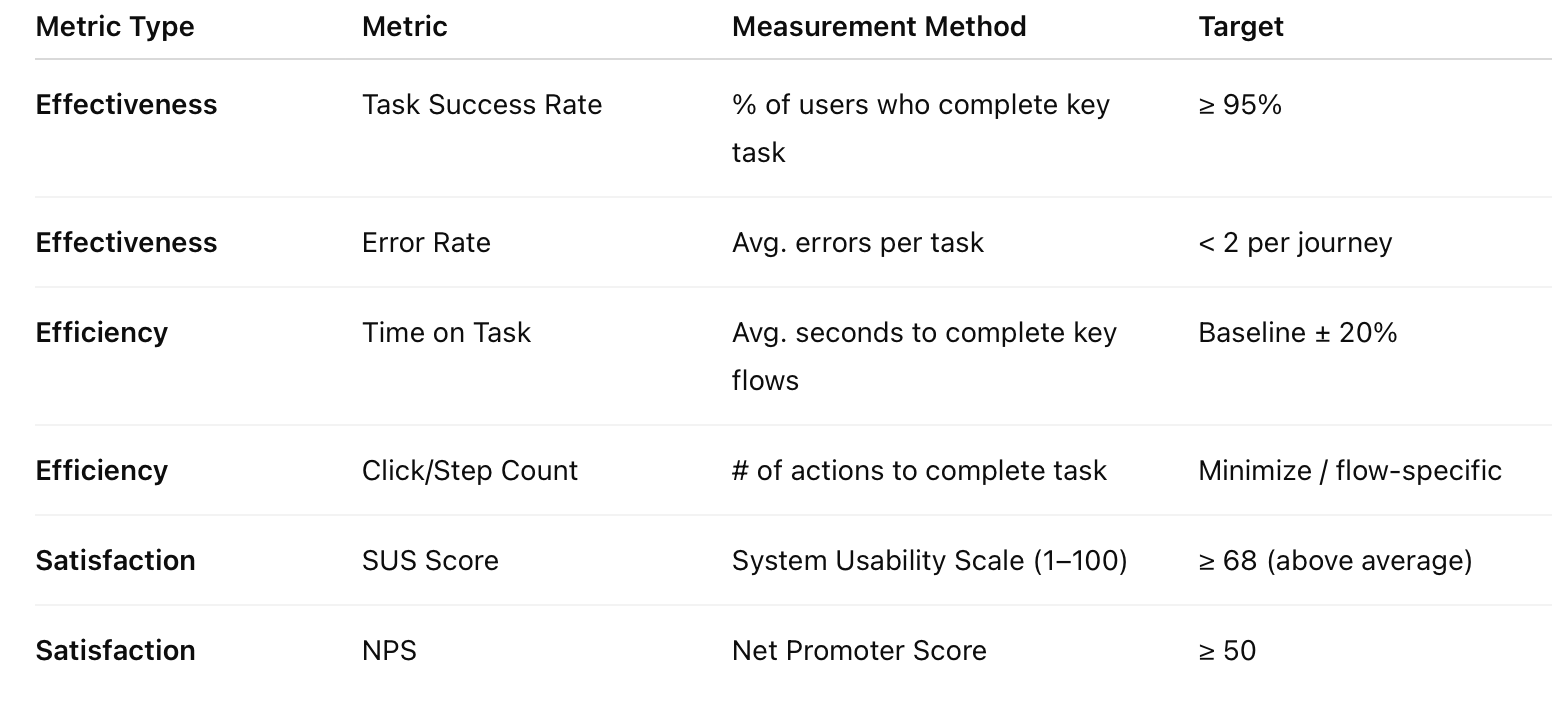

4.1 Usability Metrics Integration

Add measurable UX outcomes that can be tied back to features and releases.

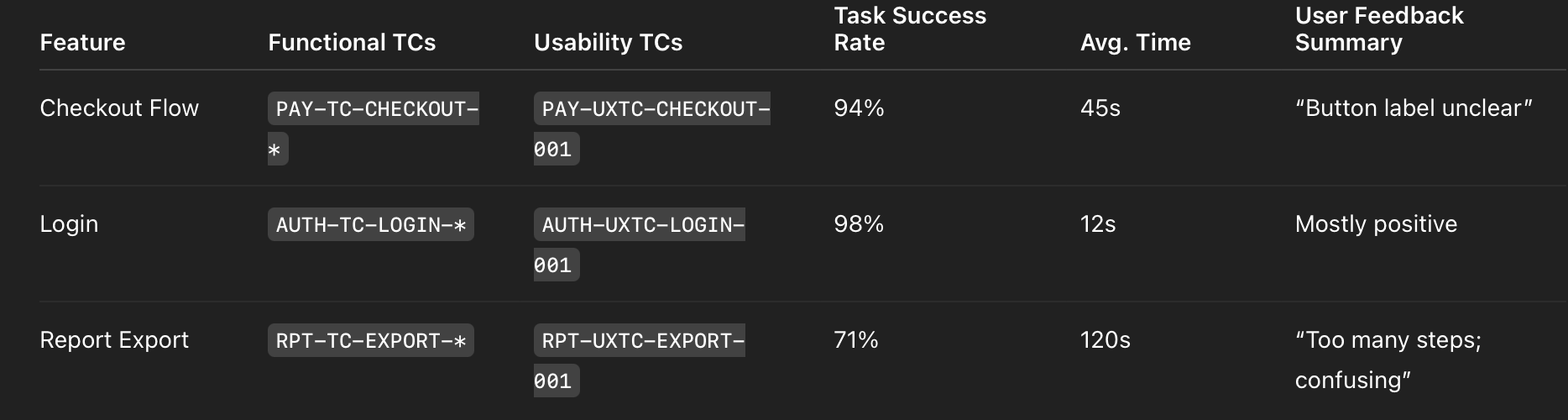

4.2 Usability Traceability Matrix Extension

Add usability-specific columns to your existing matrix.

Workflow: From Usability Study to Traceability

Design/UX defines tasks & success criteria.

QA creates Usability TCs (UXTC) referencing corresponding requirements.

UX or research team runs tests (lab, remote, analytics-based).

Results (success rate, time, feedback) are logged against UXTC IDs.

Coverage dashboard surfaces features with poor usability despite passing functional tests.
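The final step of this workflow amounts to a filter over the combined matrix. Here is a minimal sketch, assuming each requirement row carries a functional pass flag and a task success rate from its UXTC results; the field names, the sample values, and the 80% target are all hypothetical.

```python
# Hypothetical per-requirement results; values are illustrative only.
features = [
    {"requirement_id": "PAYMENTS-REQ-CHECKOUT-001", "functional_pass": True, "task_success_rate": 0.62},
    {"requirement_id": "PAYMENTS-REQ-CHECKOUT-002", "functional_pass": True, "task_success_rate": 0.91},
    {"requirement_id": "PAYMENTS-REQ-CHECKOUT-003", "functional_pass": False, "task_success_rate": 0.55},
]


def usability_gaps(rows, min_success_rate=0.80):
    """Return requirement IDs that pass functionally but miss the usability target."""
    return [
        row["requirement_id"]
        for row in rows
        if row["functional_pass"] and row["task_success_rate"] < min_success_rate
    ]


print(usability_gaps(features))
```

Requirements that fail functionally are excluded on purpose: they already show up as red in the functional view, so this list isolates the cases a functional-only matrix would report as healthy.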

Part 5: Implementation Roadmap

A realistic rollout plan across ~3 months, plus ongoing improvement.

Phase 1: Foundation (Weeks 1–4)

Goals: Align on language, structure, and ownership.

Agree on naming conventions across all pods.

Define minimum coverage thresholds (per pod, per product).

Set up central traceability repository structure.

Form the QA Governance Council and schedule monthly reviews.

Phase 2: Tooling & Integration (Weeks 5–8)

Goals: Make traceability automatic, not manual.

Configure or standardize on a test management tool.

Integrate test runs into CI/CD with TC IDs + requirement tags.

Build the unified dashboard (org-wide + per-pod views).

Automate requirement-to-TC linking (via tags).

Phase 3: Usability Integration (Weeks 9–12)

Goals: Extend from “does it work?” to “is it usable?”

Define usability metrics per pod and globally.

Create usability test case templates (UXTC) with clear tasks & success criteria.

Establish a rotating usability testing schedule (e.g., 1 pod per sprint).

Link usability findings into the traceability matrix and dashboards.

Phase 4: Continuous Improvement (Ongoing)

Goals: Keep the framework alive and evolving.

Monthly coverage health reviews (by pod + cross-pod).

Quarterly cross-pod journey tests (e.g., signup → checkout → reporting).

Retrospectives on traceability gaps and false confidence.

Gradually expand automation coverage, prioritized by risk and ROI.

Continuous Improvement Loop

Measure → Review → Prioritize → Improve → Re-measure
   ↑                                          │
   └──────────────────────────────────────────┘

Key Success Factors

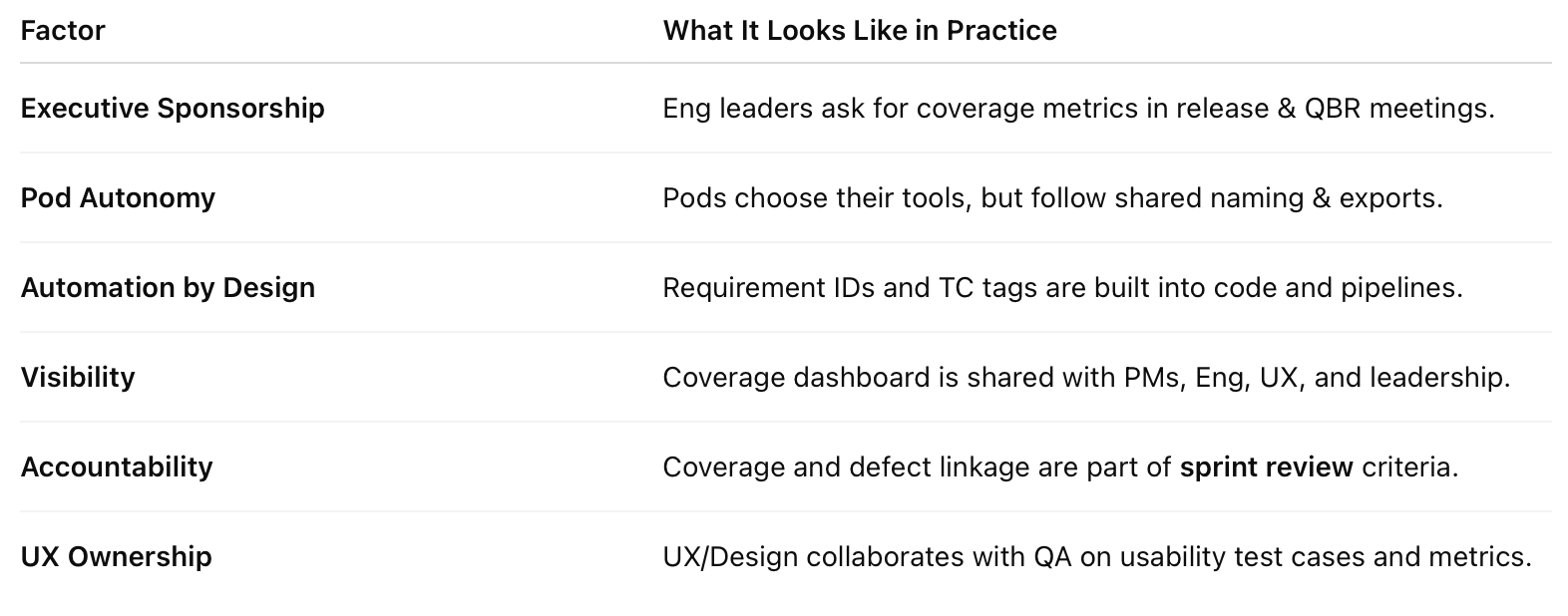

Conclusion

A unified traceability matrix for pod-based QA teams is not just a documentation exercise—it’s an operating model:

Pods keep their speed and autonomy.

Leadership gains clear visibility into risk, coverage, and usability.

Business stakeholders can finally ask, “Are we ready to ship?” and get an answer grounded in data, not intuition.

By standardizing naming, automating links between requirements and tests, expanding into usability metrics, and backing everything with a light but firm governance model, you create a system that scales with your organization instead of slowing it down.